How ABT Chain Networks Are Built Using AWS Spot Instances

2019-03-31

Author: Tyr Chen (VP of Engineering, ArcBlock)

Editor: Matt McKinney

On Friday, March 29th, the ABT Network was officially released. The ABT Network redefines next-generation blockchain infrastructure. It links independent blockchains into a single, completely decentralized network built from cloud nodes and interconnected chains. This article reviews some of the more interesting experiences we had over the last few months as we moved from idea to public release, including the experimentation, roadblocks, and successes our team encountered along the way.

To help you get the most out of this article let’s introduce a few basic concepts:

- ABT Network: Multiple networks of blockchains built using ArcBlock technology.

- ABT Chain Node: ArcBlock’s blockchain “node” created by the Forge Framework.

- Forge Framework: A complete development framework that includes everything needed to build and run DApps.

Anyone Can Deploy a Node

When developing the Forge Framework and the ABT Chain Node, we wanted them to serve two kinds of work. One is large-scale applications with millions of transactions per day. The other is DIY and small independent development projects. So, on the ABT Network, an ABT Node can be big, small, or anything in between. Our goal is to support most use cases and requirements and to deliver a premium user experience regardless of server size.

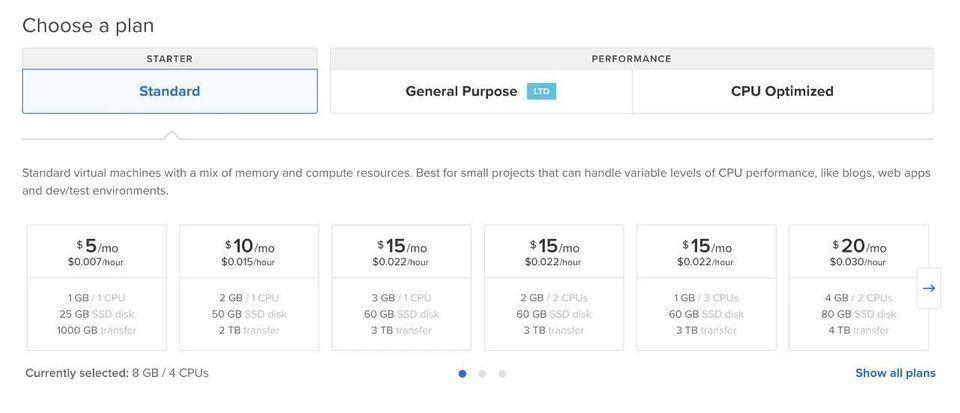

As an example, we targeted a budget of $15/month for a single node. On Digital Ocean, that budget covers the hosting plans from 1GB / 1CPU / 25GB disk all the way up to 2GB / 2CPU / 60GB.

During January and February, most of our development testing used the bare-minimum $5/month plan. We deployed nodes in the US West (San Francisco), US East (New York), Western Europe (London), and Southeast Asia (Singapore) to form a P2P network for developing Forge. Working in such a constrained environment let us expose all kinds of problems early and make sure our software was robust and usable.

With the network set up, we needed enough traffic to simulate real-world use cases. To this end, we also developed a simulator driven by an internally developed description language, which specifies how each simulation is run.

By changing its variables, we can easily adjust different aspects of our testing, including consensus, throughput, and so on. We can also create different scenarios by adding more simulations that diversify the traffic.
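ArcBlock's description language is internal and not shown here. As a rough illustration only, the Python sketch below assumes that a scenario is a plain set of variables and that it can be expanded into a reproducible schedule of transactions. The `Scenario` type, its fields (`tps`, `duration_s`, `tx_mix`), and the transaction type names are all hypothetical. Changing a variable changes the generated load.

```python
import random
from dataclasses import dataclass


@dataclass
class Scenario:
    # Hypothetical knobs; the real description language is internal to ArcBlock.
    name: str
    tps: int            # transactions to emit per second
    duration_s: int     # how many seconds the scenario runs
    tx_mix: dict        # transaction type -> relative weight
    seed: int = 42      # fixed seed so a run can be reproduced exactly


def expand(scenario: Scenario) -> list:
    """Expand a scenario into a per-second schedule of transaction types."""
    rng = random.Random(scenario.seed)
    types = list(scenario.tx_mix)
    weights = [scenario.tx_mix[t] for t in types]
    schedule = []
    for second in range(scenario.duration_s):
        # Draw `tps` transaction types for this second, honoring the weights.
        batch = rng.choices(types, weights=weights, k=scenario.tps)
        schedule.append((second, batch))
    return schedule


if __name__ == "__main__":
    s = Scenario("transfer-heavy", tps=50, duration_s=3,
                 tx_mix={"transfer": 8, "create_asset": 1, "exchange": 1})
    for second, batch in expand(s):
        print(second, {t: batch.count(t) for t in s.tx_mix})
```

Scenarios are declarative and seeded. A crashing run can therefore be replayed with exactly the same traffic after a fix.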

Once the simulator was running, our development network crashed for three straight days. The issues included out-of-memory errors, "too many open files" errors, gen_server timeouts, and full TCP send/receive buffers.

If we had simply replaced the nodes with larger machines, these issues would have become far less likely. However, our goal was to deliberately let them happen in the development environment so that most problems could be identified and handled properly.

For example, we found that the consensus engine we used was unstable and crashed from time to time. A crash could easily corrupt the state DB (state database), causing the node to crash completely and fail to recover. Our approach was this:

- Once the consensus engine crashes, we let Forge crash automatically.
- We restart Forge using the forge starter we developed.
- After the restart, we roll back to the data of the most recent block and reapply it.
- If the consensus engine recovers, the node continues from there. Otherwise, it crashes again and backtracks one more block.
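The crash-and-backtrack loop described above can be sketched in Python. This is a simplified model, not Forge's actual starter. `try_apply` is a hypothetical hook standing in for three actions: restoring state to a given block, restarting the consensus engine, and reapplying the block.

```python
from typing import Callable, Optional


def recover(latest_height: int,
            try_apply: Callable[[int], bool],
            min_height: int = 1) -> Optional[int]:
    """Roll back from the latest block until one can be reapplied cleanly.

    try_apply(height) is a hypothetical hook. It restores the state DB to the
    data of `height`, restarts the consensus engine, and reapplies the block.
    It returns True on success and False if the engine crashes again.
    Returns the height the node resumed from, or None if nothing worked.
    """
    height = latest_height
    while height >= min_height:
        if try_apply(height):
            return height      # engine recovered; continue normally from here
        height -= 1            # crashed again; backtrack one more block
    return None


if __name__ == "__main__":
    # Pretend the state for blocks above 97 was corrupted by the crash.
    print(recover(100, lambda h: h <= 97))  # resumes from block 97
```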

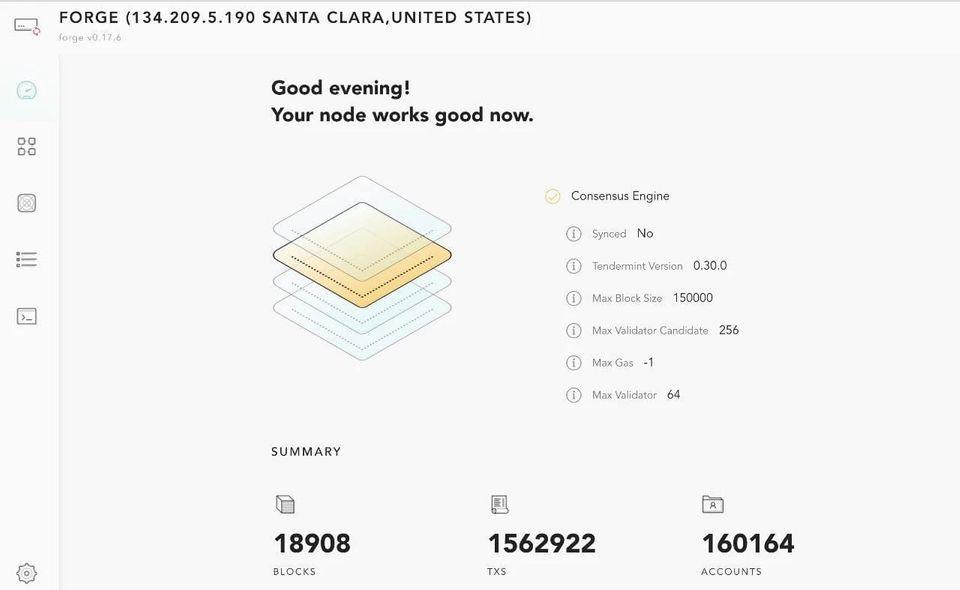

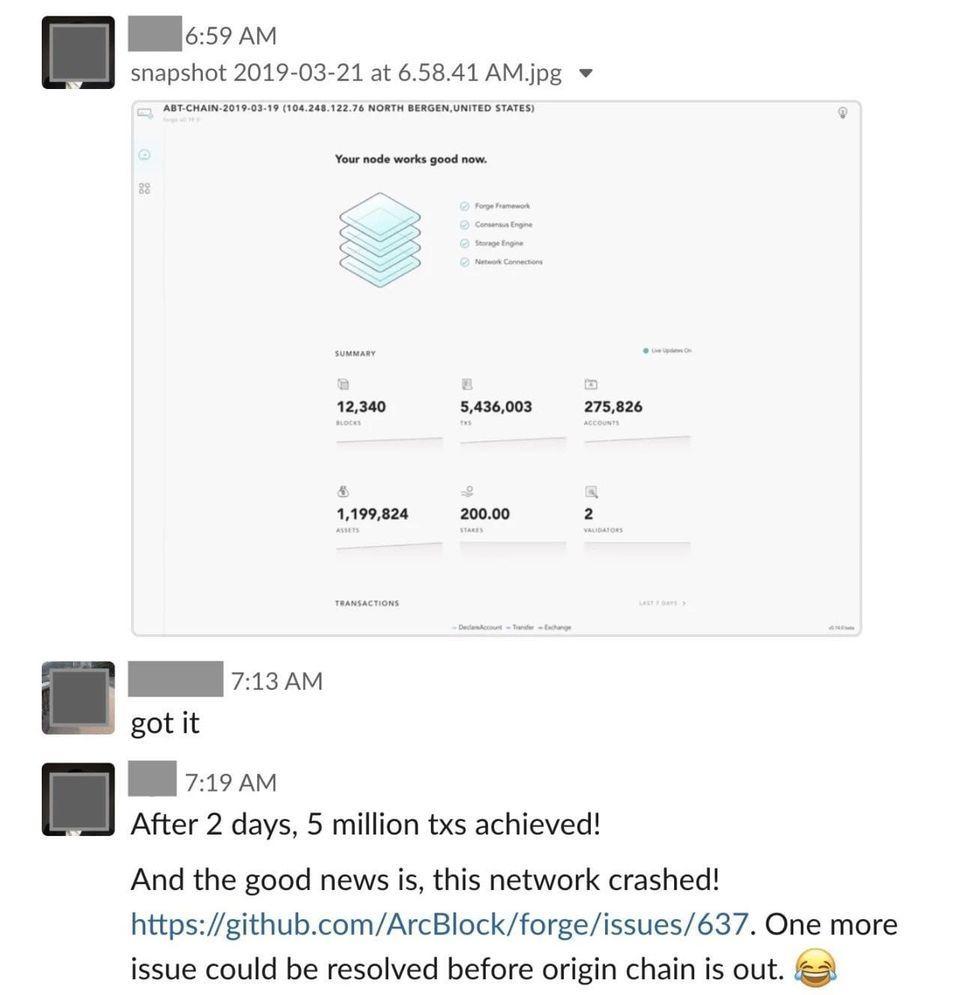

In such a harsh environment, Forge gradually matured while the nodes in the network kept dying and being reborn. If you have seen Tom Cruise in "Edge of Tomorrow", Forge followed a very similar pattern: learn, fail, and start again. With each iteration, the nodes survived longer and longer, even with very limited resources. Despite all the improvements, the good times didn't last. As we approached 1.5M TXs, the network crashed again.

This particular crash left the nodes completely unresponsive; even SSH no longer worked. At that point, our Digital Ocean monitoring showed CPU usage at 0, and we quickly identified the cause as a full disk: all 25GB had been used up. So, we took a snapshot and moved on to the next stage.
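A full disk is easy to detect before it takes a node down. The following minimal sketch is not part of Forge. It uses the standard library's `shutil.disk_usage` to report when the filesystem holding a given path crosses a usage threshold. The 90% threshold and the default path are illustrative assumptions.

```python
import shutil


def usage_ratio(total: int, used: int) -> float:
    """Fraction of the filesystem in use, from 0.0 to 1.0."""
    return used / total if total else 0.0


def check_disk(path: str = "/", threshold: float = 0.9) -> tuple:
    """Return (usage_ratio, over_threshold) for the filesystem holding `path`."""
    total, used, _free = shutil.disk_usage(path)
    ratio = usage_ratio(total, used)
    return ratio, ratio >= threshold


if __name__ == "__main__":
    ratio, alert = check_disk("/")  # point this at the node's data directory
    print(f"disk usage {ratio:.1%}" + (" - ALERT" if alert else ""))
```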

In early March, we abandoned the $5/month cloud machines and replaced them with "big" $15/month nodes. In our Digital Ocean account, we ran several networks at the same time and performed rolling upgrades. When we first started, we hit one milestone a week; by the second week of March, we were shipping a new version every day. With the upgraded nodes, our milestone of 1 million TXs was replaced by a new milestone of 5 million TXs.

From there, we quickly surpassed 6 million TXs, then 7 million TXs, until we finally introduced some breaking changes that stopped the clock.

ArcBlock's engineering team is doing a lot of industry-leading development work, as demonstrated by our work to make blockchain nodes run stably on a small $15/month cloud machine. Over the past year, we have worked with other vendors' public chain nodes. Their recommended configurations often call for a cloud server costing more than $1,000/month.

Suppose an application developer wants to deploy their own chain and initially serve users through their own nodes, with two nodes in each of four regions of the world. The cost is in the tens of thousands of dollars per month. Most small development companies, independent developers, and users cannot afford to support a project at that price. Our goal was to reduce this cost by 100x so that a developer can run a blockchain project for as little as a few hundred dollars a month.

The example above demonstrates one type of use case. What about enterprise-ready DApps that need to be deployed in production on much larger cloud servers? ArcBlock's nodes and environments are also enterprise-ready. They are designed to support applications well beyond the scale of even the largest DApps available today. ArcBlock's own ABT Network runs on Amazon Web Services. We have also partnered with leading cloud providers such as AWS, Azure, and IBM, which offer ABT Node images so you can easily spin up your own environment. Let's take a more detailed look at how we set up our own ABT Network.

A Simple, Yet Not So Simple, Production Environment

Since the ABT Network emphasizes interconnecting chains into a network, we started with three chains named after elements of the periodic table: "Argon", "Bromine", and "Titanium". Bromine is a test chain that runs the latest nightly build. For these three chains, we needed to prepare a secure and reliable production environment.

Our production environment includes the following requirements:

- Each chain is deployed to four regions in Asia Pacific and Europe;

- Argon and Titanium each include sixteen nodes; Bromine uses four nodes;

- All nodes expose only the p2p port;

- The node's GraphQL RPC and its built-in block explorer are accessible externally through an ELB, while gRPC allows only local access;

- In each region, each chain has its own ELB, and Route 53 routes the chain's domain name across these ELBs using latency-based routing.
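To make the requirements concrete, the topology can be captured as data. The following Python sketch is illustrative and is not forge-deploy's actual configuration. The region names are placeholders, since the article names only four regions in Asia Pacific and Europe. Spreading each chain's nodes evenly across regions is also an assumption. The node counts come from the requirements above. The sketch confirms the totals used in the cost formula that follows: 36 nodes and 12 ELBs, one per chain per region.

```python
from dataclasses import dataclass

# Assumed region list for illustration; the article does not name all four.
REGIONS = ["ap-southeast-1", "ap-northeast-1", "eu-central-1", "eu-west-1"]


@dataclass
class Chain:
    name: str
    total_nodes: int  # assumed to be spread evenly across REGIONS

    def nodes_per_region(self) -> int:
        per_region, remainder = divmod(self.total_nodes, len(REGIONS))
        if remainder:
            raise ValueError(f"{self.name}: {self.total_nodes} nodes do not split evenly")
        return per_region


CHAINS = [Chain("argon", 16), Chain("bromine", 4), Chain("titanium", 16)]


def total_nodes(chains) -> int:
    return sum(c.total_nodes for c in chains)


def total_elbs(chains) -> int:
    # One ELB per chain in each region.
    return len(chains) * len(REGIONS)


if __name__ == "__main__":
    for c in CHAINS:
        print(f"{c.name}: {c.nodes_per_region()} node(s) in each of {len(REGIONS)} regions")
    print("nodes:", total_nodes(CHAINS), "ELBs:", total_elbs(CHAINS))
```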

To manage all of these deployments, we rely heavily on automation with Ansible and Terraform. As with everything we do, we look for ways to reduce costs while improving the reliability of our production environments.

Take the above configuration and use only the inexpensive c4.large / c5.large instances, with a 110 GB EBS volume per node and one ELB per chain in each region. The fixed cost for one month is $3,721.

Calculation: $0.11/hour (c4.large) × 36 instances × 24 hours × 31 days + 36 × 110 GB × $0.12 per GB-month (EBS) + $25/month (ELB) × 12 ELBs = $2,946.24 + $475.20 + $300 = $3,721.44

EC2 is by far the largest cost, at close to $3,000/month.
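The same formula can be written as a small Python function. It uses the prices quoted above, which are 2019 figures and may not match current AWS pricing. Making the EC2 hourly rate a parameter makes it easy to plug in a different instance price.

```python
def monthly_cost(nodes=36, ec2_hourly=0.11, ebs_gb=110, ebs_per_gb_month=0.12,
                 elbs=12, elb_monthly=25.0, hours=24 * 31):
    """Return (ec2, ebs, elb, total) monthly cost in USD."""
    ec2 = ec2_hourly * nodes * hours       # instance-hours for the month
    ebs = nodes * ebs_gb * ebs_per_gb_month
    elb = elbs * elb_monthly
    return ec2, ebs, elb, ec2 + ebs + elb


if __name__ == "__main__":
    ec2, ebs, elb, total = monthly_cost()
    print(f"EC2 ${ec2:,.2f} + EBS ${ebs:,.2f} + ELB ${elb:,.2f} = ${total:,.2f}")
    # EC2 $2,946.24 + EBS $475.20 + ELB $300.00 = $3,721.44
```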

So, is there anything we can do to reduce our costs while keeping our production environment at our availability standards? On AWS, the answer is actually straightforward, with a few small caveats.

Our answer: Spot Instances. The premise of Spot Instances is simple: users can purchase unused Amazon EC2 capacity at steeply discounted rates. Spot Instances are the same as regular EC2 instances, but they can be interrupted at any time.

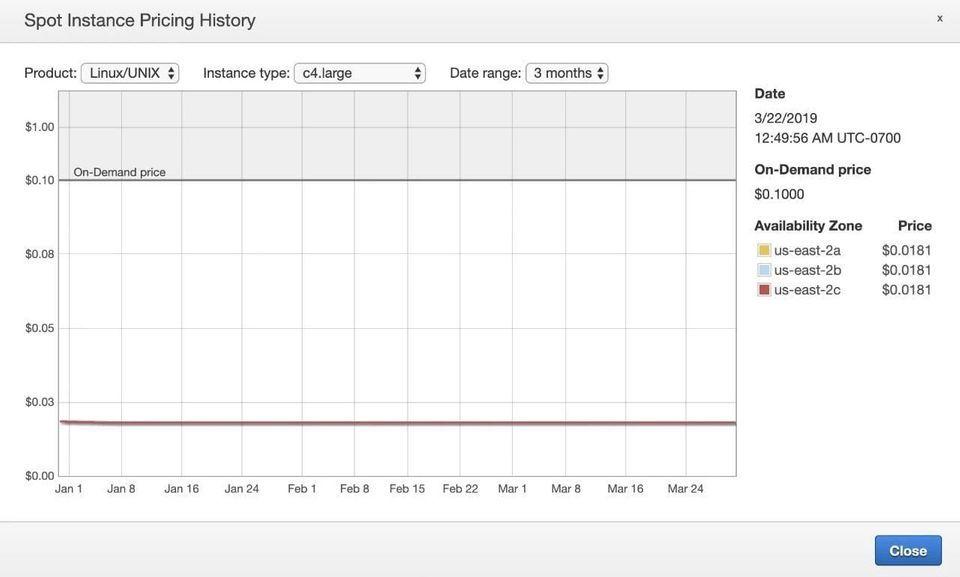

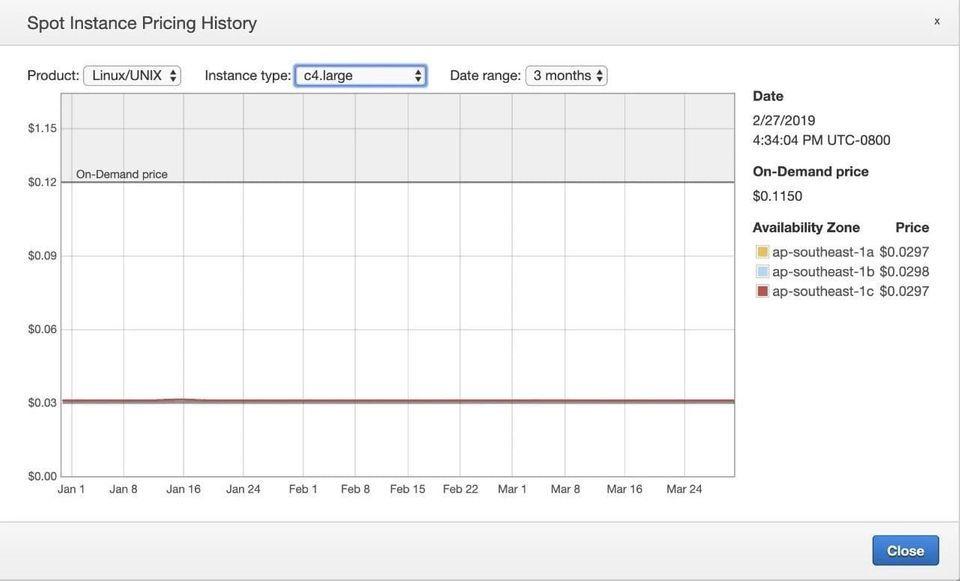

The Spot Instance price trends in us-east-2 and ap-southeast-1 are shown in the charts above.

Simply by using Spot Instances, we can cut the EC2 cost from nearly $3,000 to about $600 per month. That brings the total to roughly $1,300 per month. The cost looks great, but what happens when a Spot Instance is terminated? How can we restore service as quickly as possible? And how can we automate the process so that replacement machines are switched in and brought online without manual work?
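Part of the answer is knowing when an interruption is coming. AWS publishes a Spot interruption notice about two minutes in advance at the instance metadata path `/latest/meta-data/spot/instance-action`. The path returns 404 until an interruption is scheduled. After that, it returns a small JSON document with `action` and `time` fields.

The following Python sketch polls that path. It assumes IMDSv1-style access is allowed; with IMDSv2 enforced, you would first need a session token. The `on_notice` hook is hypothetical; it could, for example, stop Forge cleanly. The article does not say whether ArcBlock uses this notice.

```python
import json
import time
import urllib.error
import urllib.request

METADATA_URL = "http://169.254.169.254/latest/meta-data/spot/instance-action"


def parse_notice(body: str) -> tuple:
    """Parse an instance-action document into (action, time)."""
    doc = json.loads(body)
    return doc["action"], doc["time"]


def fetch_notice(url: str = METADATA_URL, timeout: float = 2.0):
    """Return (action, time) if an interruption is scheduled, else None."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return parse_notice(resp.read().decode())
    except urllib.error.HTTPError as err:
        if err.code == 404:  # 404 means no interruption is scheduled yet
            return None
        raise


def watch(on_notice, interval: float = 5.0, fetch=fetch_notice) -> None:
    """Poll until a notice appears, then call the (hypothetical) on_notice hook."""
    while True:
        notice = fetch()
        if notice is not None:
            on_notice(*notice)
            return
        time.sleep(interval)
```

On an instance, `watch(lambda action, when: print(action, when))` blocks until a notice arrives. The `fetch` parameter exists only so the polling logic can be exercised without EC2.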

To overcome this limitation, we separated the root disks from the data disks:

- All of the data Forge stores is placed on the data disk.
- Forge's configuration, the node's private key, and the certifier (validator) private key live on the root disk. After initialization, they are AES-encrypted and backed up to an S3 bucket that allows only a single write.
- While the nodes are running, the data disk of one healthy node is backed up periodically for each chain in each region.

Thus, when a certifier node is killed, we can recover its data disk from the most recent backup and then retrieve the certifier node's private key and configuration from S3.
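The restore decision itself is simple bookkeeping. The following Python sketch models it under our own assumptions. Each backup is a record with a chain, region, timestamp, and health flag. The `Backup` type, its field names, and the step wording in `restore_plan` are hypothetical; in practice these would map to EBS snapshots and S3 objects. The sketch picks the newest healthy backup for the chain and region where a node was lost.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Backup:
    chain: str
    region: str
    snapshot_id: str   # e.g. an EBS snapshot ID (illustrative)
    taken_at: int      # Unix timestamp
    healthy: bool      # was the source node healthy when backed up?


def latest_backup(backups: Iterable[Backup], chain: str, region: str) -> Optional[Backup]:
    """Newest healthy backup for one chain in one region, or None."""
    candidates = [b for b in backups
                  if b.chain == chain and b.region == region and b.healthy]
    return max(candidates, key=lambda b: b.taken_at, default=None)


def restore_plan(backup: Backup, node_name: str) -> list:
    """Ordered steps to bring a replacement node back (descriptive only)."""
    return [
        f"create data volume from {backup.snapshot_id} in {backup.region}",
        f"attach the volume to the replacement instance for {node_name}",
        f"download and decrypt the keys and config for {node_name} from S3",
        f"start Forge on {node_name}",
    ]
```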

We believe the idea is simple and intuitive, but it does require some planning to make it work. For the ABT Network, we have tested and validated this approach, and we use Spot Instances today to run our production environments. It works. DApp developers and other blockchain teams can use this way of running blockchain nodes on Spot Instances as a reference design.

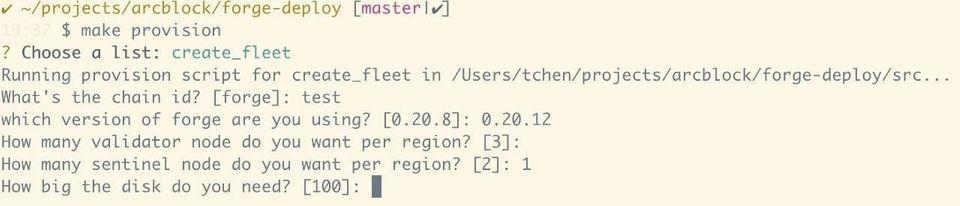

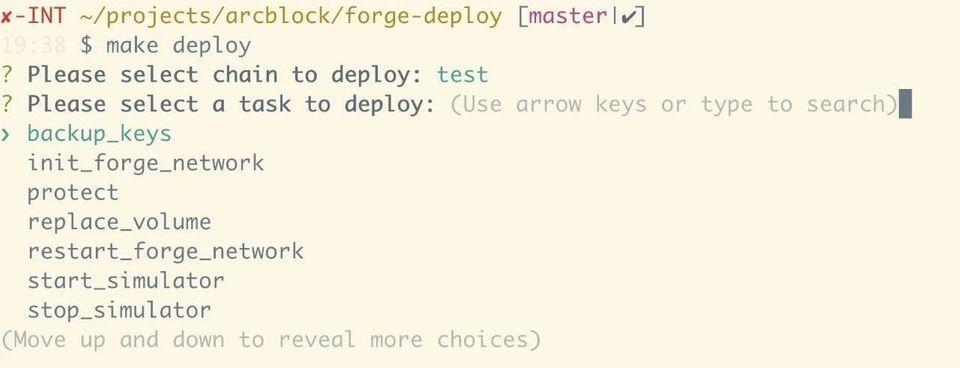

To make Spot Instances work as described, our deployment script, forge-deploy, is divided into four parts:

- One-time scripts: for example, creating a security group for each VPC in each region;

- Scripts for building the Forge AMI: every time we release a new version, we create a new AMI;

- Scripts that create the resources needed for a new chain: the spot request, EBS volumes, the ELB, target group, listeners (and listener rules), and the domain name and its DNS resolution policy;

- Scripts that manage an existing chain: initializing a chain, restarting a node, upgrading a node, repairing a damaged node, adding a new node, etc.
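One way to organize such a tool is one subcommand per script, grouped by the four parts listed above. The following argparse skeleton is a hypothetical sketch. The article does not describe forge-deploy's real interface, and apart from create_fleet, every command name here is made up for illustration.

```python
import argparse

# Hypothetical command names, grouped by the four parts of forge-deploy.
PARTS = {
    "one-time": ["create_security_groups"],
    "ami": ["build_ami"],
    "new-chain": ["create_fleet"],
    "existing-chain": ["init_chain", "restart_node", "upgrade_node",
                       "repair_node", "add_node"],
}


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(prog="forge-deploy")
    sub = parser.add_subparsers(dest="command", required=True)
    for part, commands in PARTS.items():
        for name in commands:
            cmd = sub.add_parser(name, help=f"{part} script")
            if part in ("new-chain", "existing-chain"):
                cmd.add_argument("chain", help="chain name, e.g. argon")
            if name.endswith("_node"):
                cmd.add_argument("--node", required=True, help="node to act on")
    return parser


def part_of(command: str) -> str:
    """Which of the four parts a command belongs to."""
    for part, commands in PARTS.items():
        if command in commands:
            return part
    raise KeyError(command)
```

Keeping one-time, image, provisioning, and day-to-day commands separate makes it harder to rerun a destructive provisioning step by accident.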

During the last two weeks of March, our engineers developed and tested forge-deploy based on our original, fragmented scripts (the scripts for the Digital Ocean machines). Our daily routine was: create a chain, destroy it; create another one, then destroy that one, again and again. In those two weeks, we solved problems that might take most blockchain teams a whole year to solve. At the peak, six chains were running in parallel. In total, we created and destroyed over thirty chains, including some that lived for only one day: abtchain, origin, bigbang, test, abc … Note that by "chains" we mean cross-region chains consisting of multiple nodes, not single-node chains.

Thanks to the confidence we gained from this experience, we took a risk on launch day. Less than 30 minutes before the final countdown, we destroyed all three chains so that we could recreate them and let the whole community witness the creation of the first block. The scripts ran more slowly than we expected. Even so, twenty minutes after the countdown, all three chains (Argon, Bromine, and Titanium) went live. Deploying a chain takes only two commands.

For each of the four regions, create_fleet does the following:

- Get the ID of the default VPC in the current region

- Get the subnet IDs of that VPC

- Get the IDs of the security groups created in advance

- Request a Spot Fleet for the certifier node with the default configuration

- Request a Spot Fleet for the sentinel nodes with a preset configuration

- Wait for all requested instances to be up and running

- Create an ELB

- Create a target group and add all instances to it

- Obtain the ID of the pre-uploaded certificate

- Create two ELB listeners: port 80 returns a 301 redirect to port 443, and port 443 forwards traffic to the target group

- Create a DNS record for the domain and set a latency-based routing policy

Once all four regions are done, create_fleet generates an Ansible inventory covering all of the chain's instances for the subsequent steps.
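Most of these steps map directly onto AWS API calls. The following Python sketch is illustrative and is not the actual create_fleet code. It only builds data and makes no network calls:

- the request payloads for the two ELB listeners, in the shape accepted by boto3's `elbv2.create_listener`;
- the latency-based DNS record, in the shape accepted by `route53.change_resource_record_sets`;
- an Ansible INI inventory.

ARNs, zone IDs, domain names, and role names are placeholders.

```python
def listener_requests(lb_arn: str, tg_arn: str, cert_arn: str) -> list:
    """Keyword arguments for two elbv2.create_listener calls."""
    redirect_80 = {
        "LoadBalancerArn": lb_arn,
        "Protocol": "HTTP",
        "Port": 80,
        "DefaultActions": [{
            "Type": "redirect",
            "RedirectConfig": {"Protocol": "HTTPS", "Port": "443",
                               "StatusCode": "HTTP_301"},
        }],
    }
    forward_443 = {
        "LoadBalancerArn": lb_arn,
        "Protocol": "HTTPS",
        "Port": 443,
        "Certificates": [{"CertificateArn": cert_arn}],
        "DefaultActions": [{"Type": "forward", "TargetGroupArn": tg_arn}],
    }
    return [redirect_80, forward_443]


def latency_record_change(domain: str, region: str, lb_dns: str, lb_zone_id: str) -> dict:
    """ChangeBatch for a latency-based alias A record pointing at one region's ELB."""
    return {"Changes": [{
        "Action": "UPSERT",
        "ResourceRecordSet": {
            "Name": domain,
            "Type": "A",
            "SetIdentifier": region,   # one record per region under the same name
            "Region": region,          # marks the record for latency-based routing
            "AliasTarget": {"HostedZoneId": lb_zone_id, "DNSName": lb_dns,
                            "EvaluateTargetHealth": True},
        },
    }]}


def ansible_inventory(instances: list) -> str:
    """INI inventory grouped by role; instances are dicts with name, ip, role."""
    groups = {}
    for inst in instances:
        groups.setdefault(inst["role"], []).append(inst)
    lines = []
    for role in sorted(groups):
        lines.append(f"[{role}]")
        lines += [f"{i['name']} ansible_host={i['ip']}" for i in groups[role]]
        lines.append("")
    return "\n".join(lines)
```

With boto3, each listener dict would be passed as `elbv2_client.create_listener(**kwargs)`. The change batch would go to `route53_client.change_resource_record_sets(HostedZoneId=..., ChangeBatch=...)`.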

Next, init_forge_network does the following:

- Mount the data disk on the corresponding instance and format it with the XFS file system

- Start Forge with a temporary configuration file to generate the node key and validator key

- Back up the generated keys to S3

- Find the certifier node from the inventory file and write its validator address into the genesis configuration

- Start Forge
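The genesis step picks the certifier node(s) out of the inventory and writes their validator addresses into the genesis configuration. It can be sketched in Python as below. The article does not show Forge's real genesis format. The JSON layout (`chain_id`, and `validators` with `address` and `power`), the equal default power, and the inventory shape are all assumptions for illustration.

```python
import json


def certifiers(inventory: dict) -> list:
    """Hosts in the (hypothetical) 'certifier' group of a parsed inventory."""
    return inventory.get("certifier", [])


def build_genesis(inventory: dict, chain_id: str, power: int = 10) -> dict:
    """Genesis config listing every certifier's validator address."""
    validators = [{"address": host["validator_address"], "power": power}
                  for host in certifiers(inventory)]
    if not validators:
        raise ValueError("inventory has no certifier nodes")
    return {"chain_id": chain_id, "validators": validators}


if __name__ == "__main__":
    inventory = {
        "certifier": [{"name": "argon-1", "validator_address": "ADDR1"}],
        "sentinel": [{"name": "argon-2"}, {"name": "argon-3"}],
    }
    print(json.dumps(build_genesis(inventory, "argon"), indent=2))
```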

Once all the nodes are up, wait a moment, and a chain is born!

If you want to learn more about the ABT Network, you can visit https://www.abtnetwork.io.